AG Technical Responsibility Meeting Protocol

28 October 2022, Berlin

Programme Update

Status update from a programmatic, technological and policy perspective, highlighting the importance of AI for a programme like FCAS. The programme is currently in the technology maturation phase. Since the last meeting, the geostrategic situation has changed fundamentally with the Russian attack on Ukraine. This will also have an impact on FCAS, although the details still need to be defined. The war has once again underlined the importance of Franco-German cooperation.

On the technical transferability of IHL rules to AI

Distinction: autonomous systems vs. systems of systems vs. general AI. AI application in a military context: a concrete task, e.g. object recognition, which is the focus here. Policy question: Should the AI deliver a legally compliant result? Does the AI have to understand a rule, or is an implementation that conforms to the rule sufficient? I.e., legally compliant result (whether) vs. legally compliant behaviour (how). To be defined politically and ethically: What decisions is an AI allowed to make at all?

Questions:

How can control be meaningfully handed over to humans in cases of doubt?

How concretely must a catalogue be defined, given that more definition also limits flexibility? Is it defined per use case, or generally and in principle?

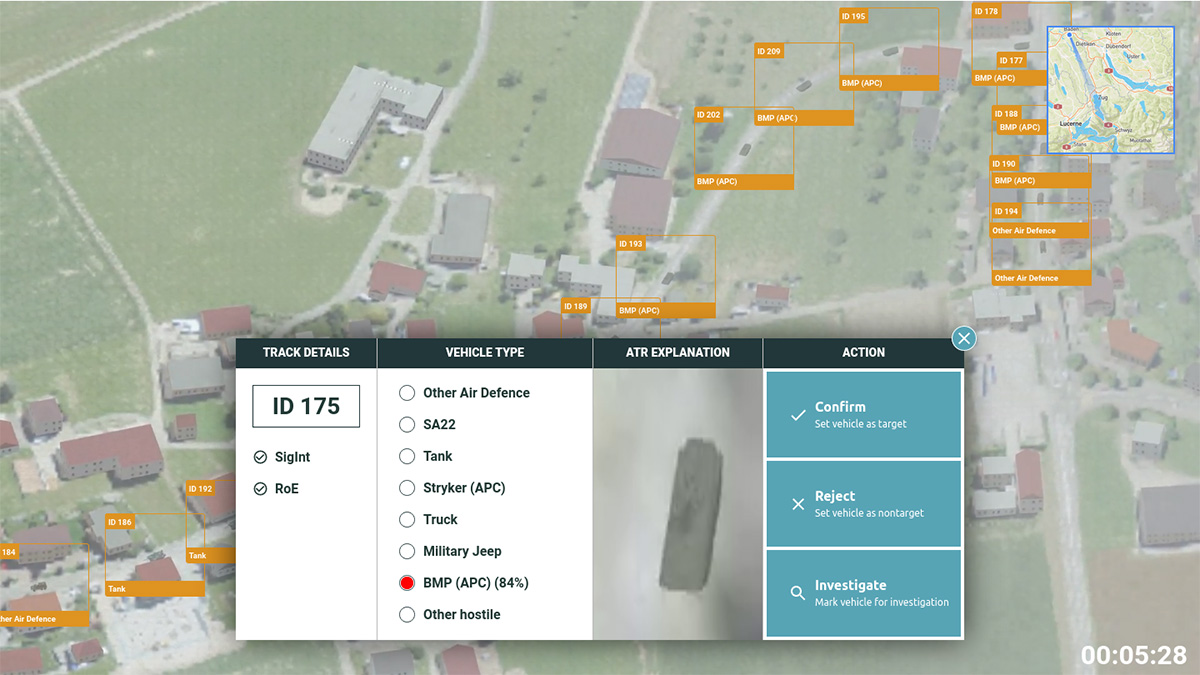

Concrete use case: object recognition of combat vehicles. The application is integrated into a command and control system; only a very small part of targeting is decision-supported.

A basic data set is needed: Which nation has which weapon systems?

Interfaces have to be created to achieve multi-domain capability. Data needs to be in the right place at the right time, not travelling around the world.

What are the technical challenges that need to be overcome? If AI is "everywhere", it may be too complex. What happens if communications fail?

Define goals as early as possible; legal and operational elements need to be reconciled. Where do the decision-makers sit?

The Patriot system has had automation for 30 years, which does not raise significant issues; critical functions are performed by humans.

For FCAS: What should humans take over? Where is human control needed? What is the role of the human?

Much depends on the operational context: depending on the context, the machine can take over more or less. What are the guard rails within which the machine should operate?

Update on demonstrator development

Making the ethical aspects of AI tangible in a military deployment scenario.

Presentation of the current status of the AI demonstrator (ethical AI in an FCAS).

AI provides a lot of information and data, most of it very good, but it has to be processed for humans. ATR (automatic target recognition) can be deceived, e.g. by clever concealment of vehicles (camouflage).

Explanation of the scenario. Comparison with decisions in medicine (keyword: triage): here, life-and-death decisions are made (with AI) and are legally covered.

IEEE 7000 Standard: Seven pillars of artificially intelligent systems. Main pillar in FCAS: "Anthropocentrism". How can ethics be operationalised? Different fields of science come into play: mathematics, physics, engineering and psychology, cognitive and occupational science. For FCAS, systems engineering research questions must be asked. Responsibility is the focus.

IEEE Standard Model Process for Addressing Ethical Concerns during System Design: civil and commercial initiatives for AI compliance. IEEE 7000-2021 is part of a series of standards; many do not affect FCAS, but there may be an IEEE 700x standard that affects us and that we can help shape.

Humans remain at the centre and will always remain the decision-makers. Operators want to be relieved as much as possible, trusting that the software works and is certified. An FCAS system must reflect such core values. However, this standard may not be fully applicable, which is why implementation should be integrated into the process. Modelling should be done and implementation measures should be taken. If unforeseen events occur, documentation enables analysis.

Way ahead

Examine the established Patriot MMI systems. Question of doctrine: the application of AI in weapon systems needs to be established, ideally coordinated with international partners. Unmanned systems can be weaponised; a human-centred approach has been defined, but it has not been clarified when such systems can be deployed. Conduct a workshop to sharpen the concept of ethics in the context of FCAS (13 January 2023).